With the support of the NLnet Foundation, we have been looking at extending the Serval Mesh to support search and service discovery functionality on isolated mesh networks, i.e., without relying on internet access. This is what we talked about doing for this project:

Search and discovery have become invaluable elements of the modern internet. However, for communities experiencing transient or chronic loss of internet access, these facilities are not available. This can prevent these communities from promoting, finding and utilising local services, which in turn can impair economic capacity and recovery. This project seeks to add a simple fully-distributed search and discovery mechanism to the Serval Mesh.

COVID-19, Cyclone Harold and the complete lock-down of Vanuatu have made it problematic to pilot the result with our South Pacific partners there. Testing will thus occur with IRNAS in Europe and also in Australia, where we have access to the Arkaroola Wilderness Sanctuary as a geographically large test site. The capstone output will be an Ubuntu package for the Serval Mesh, including the new search and discovery functionality.

This blog post describes the work undertaken over the past months on this, to satisfy the first milestone.

We have come to take the ability to search for information and services on the internet for granted, precisely because they have become so useful and universally available. The trouble is that they require an extensive international infrastructure in order to operate. This means that they are not available in areas lacking communications infrastructure, or where communications infrastructure has been destroyed or denied.

It is not merely as simple as saying that we should create a distributed internet that supports all of the services that we currently expect from the internet, because global services require infrastructure to operate. Otherwise the providers of these services would have implemented them this way themselves, because they would have been very happy to save all of the money that they otherwise have to spend on creating and maintaining that infrastructure.

However, if we narrow our focus down to how we can provide local search and discovery services, then the situation changes. Just as Serval’s SMS-like MeshMS service and local social-media system, MeshMB, are able to provide local services without requiring infrastructure, the challenge of this current project is how we can define and provide local search and discovery services built on the Serval Mesh’s distributed communications primitives. And that is exactly what this milestone sets out to do.

So let’s break this down into the three steps that we need to pursue to achieve this:

1. Identify the precise search and discovery services to be provided, including from both data provider and consumer perspectives.

So what do we think are the kinds of search and discovery services that we can implement over the Serval Mesh’s distributed networking primitives? Serval’s Rhizome distributed data system effectively works like a magic file share: Various users put files (or file-like objects we call bundles) into it, and those then get distributed throughout the network in an opportunistic manner. The result is an approach that provides eventual consistency, provided that there is sufficient storage on each node, and provided that there are sufficient opportunities for network communications.

The storage limitation is addressed by having a prioritisation system, so that the highest priority material is retained if there is not space for everything. To date, the priority system has been relatively simple, with smaller files/bundles taking priority over larger ones. This could easily be extended to prioritise local content over remote content in a variety of ways. These could include geographic bounding boxes for content, indicating that it is only of relevance in a given geographical area; a hop counter; or tagging content as belonging to a given community. Community tagging has the advantage that material could follow a community who were on the move. None of these is perfect, nor are they bullet-proof against various forms of denial of service, as is common for most mesh networking systems. Essentially, any mesh network is very difficult to defend against a well-resourced aggressor. We will thus just focus on how we can add the ability to search and discover content on a mesh network.
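To make the prioritisation idea concrete, here is a minimal sketch in Python of how a node might order bundles for retention. It is not how Rhizome is implemented: the `BundleInfo` fields, the `max_local_hops` threshold and the function names are all assumptions made for illustration. Community membership is considered first, then hop count, with the existing "smaller is better" rule as the tie-breaker.

```python
from dataclasses import dataclass

@dataclass
class BundleInfo:
    # All fields are hypothetical; real Rhizome manifests may differ.
    bundle_id: str
    size_bytes: int
    hops: int            # assumed counter of hops travelled so far
    community: str = ""  # optional community tag

def retention_order(bundles, my_communities, max_local_hops=3):
    """Return bundles sorted from highest to lowest retention priority."""
    def key(b):
        in_my_community = b.community in my_communities
        is_local = b.hops <= max_local_hops
        # False sorts before True, so the desirable properties are negated.
        return (not in_my_community, not is_local, b.size_bytes)
    return sorted(bundles, key=key)

def evict_to_fit(bundles, my_communities, capacity_bytes):
    """Keep the highest-priority bundles that fit within capacity_bytes."""
    kept, used = [], 0
    for b in retention_order(bundles, my_communities):
        if used + b.size_bytes <= capacity_bytes:
            kept.append(b)
            used += b.size_bytes
    return kept
```

A geographic bounding-box test could be added as a further element of the sort key in the same way.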

The Serval Rhizome system supports both encrypted and non-encrypted content. Each bundle also has meta-data. At the most basic level, we can simply allow users to search this content, without describing any additional services or semantics. We should do this, but also consider services that can be created that intentionally support discovery and search. Also, from another perspective we should think about the types of discovery and search services that are desirable for infrastructure-deprived contexts. Ideally, by considering both what is possible and what is desirable, we can find those capabilities that are going to be successful in practice.

From the possibility perspective, we need to formulate search and discovery systems that can work using only cached data. This leads to a positive characteristic, in that the time to perform a search can be very rapid in almost all cases, because no network latency will be involved. There is of course a negative aspect, in that if the data has not reached the device performing the search, then it cannot be searched. This is the fundamental limitation of the Serval Rhizome data model, and is the trade-off for the ability to operate completely independently of supporting infrastructure. As with every other network model, the nature of the network influences the nature and architecture of the network services that can be delivered, especially if they are to perform well.
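As a rough illustration of what searching only cached data means, the sketch below scans the meta-data of whatever bundles have already arrived on the device. The `cache` dictionary layout and the `search_cached` name are assumptions for this example, not part of the Serval Mesh API.

```python
def search_cached(cache, query):
    """Return ids of cached bundles whose meta-data contains every query term.

    `cache` maps a bundle id to a dict of meta-data fields (hypothetical
    layout). Nothing is fetched from the network: a bundle that has not yet
    reached this device simply cannot appear in the results.
    """
    terms = query.lower().split()
    results = []
    for bundle_id, metadata in cache.items():
        text = " ".join(str(value) for value in metadata.values()).lower()
        if all(term in text for term in terms):
            results.append(bundle_id)
    return results
```

Because the whole search runs against local storage, its running time depends only on how much has been cached, not on network conditions.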

To help us identify services that are of interest to consumers, it’s probably a good idea for us to look at statistics relating to search engine use, such as these data from 2020:

https://www.statista.com/statistics/271599/most-common-online-search-topics-of-internet-users/

This lists the top 20 reasons for search globally, which has some limitations for our purposes, which we will discuss shortly, but let’s look at the top 10:

13% - Translate

11% - Social Network

10% - Entertainment

10% - Shopping

10% - Weather

9% - News

8% - Web Services

5% - Gambling

4% - Mail

4% - Traveling

Let’s start by saying that I don’t really have a strong opinion as to how reliable I believe these percentages to be, but the overall categories look reasonably sensible, although I am dubious when pornography doesn’t make it into the top-20 list at all. Perhaps that comes under “entertainment”, “shopping” and/or “Web Services” to some degree. But that’s rather academic for us, because we are not interested in creating an infrastructure-free pornography distribution service. In fact, history has shown that you can tell when a communications medium begins to be useful, precisely because it starts being used to carry pornography.

Rather, we are actually actively wanting to create a platform that doesn’t get overwhelmed by porn. This can be difficult, precisely because of the propensity for communications media to get used for this purpose. The services we are creating need to be crafted as intrinsically safe places, to the maximum extent possible. This is not always easy, and can never be done perfectly, but there are sensible measures that can be employed to help.

The corollary to this is that if you don’t take steps to make communications spaces safe, then they rapidly end up as very unsafe places. This has shown itself repeatedly, especially in recent history: CB radio, with its near total lack of accountability, consists in most places in large part of a stream of profanity and abuse, making it unsafe for families and young people, in spite of often quite strong laws that ban even the use of swear words on the medium. Then we have examples closer to our area of interest, such as online chat systems like FireChat. I recall trying it out around the time it was released, in the mundane environment of an Australian city, and even there, the lists of nearby users were eye-scorchingly offensive to read. This isn’t a complaint about FireChat per se (and they might well have great tools for dealing with this now), but really just an example of what I consider to be an axiom of human communications: If people are unaccountable for what they say, they will say just about anything, and a lot of it will be designed to shock, and a lot of it will be sexually explicit and abusive. If we want to create services that are going to be widely used by civil society, rather than just “uncivil” users, then we have to take active measures to help nudge the use of the system in the right directions, and to make abusive use less easy and less attractive.

For example, the text messaging and neighbour discovery mechanisms already in the Serval Mesh are quite conservative about showing you random participants who are a long distance away on the network. This reduces the potential for unaccountable abusive activity, such as using offensive account names, or random people sending you messages that appear together in your personally curated inbox. Instead, your inbox only shows content from people you have added as contacts, or in the case of the Twitter-like micro-blogging facility, only from people whose feed you have chosen to follow. This creates a safe working place, where you can carry on your day to day activity, without fear of random anonymous abuse.

The trade-off is that you have to actively choose to go through your contact requests when you want to add new people to your network. This makes it harder for social groups to self-organise, and necessarily means that you may have to make excursions from the safe space into the wild exterior. One way to improve on this is to have a filter for truly “nearby” users, so that you can find people on the network when you meet in person. This could require direct network connection, i.e., that the users’ devices are physically directly peered with one another, or use some other mechanism, such as a more relaxed hop-count limit, or perhaps better, sorting the list of potential contacts by hop-count and time since last reception, so that fresh local requests appear at the top of the list. Of course, this current work on supporting search and discovery mechanisms helps to directly address this issue as well, and we will keep solving this problem in mind as we design them.
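The sorting idea can be sketched in a few lines of Python. The `ContactRequest` fields are assumptions for illustration; the Serval Mesh may track this information differently.

```python
from dataclasses import dataclass

@dataclass
class ContactRequest:
    # Hypothetical record of a pending contact request.
    name: str
    hops: int          # network hops between us and the requester
    last_heard: float  # time we last received anything from them (seconds)

def sort_requests(requests, now):
    """Order requests so that near and recently heard users come first."""
    return sorted(requests, key=lambda r: (r.hops, now - r.last_heard))

def nearby_only(requests, max_hops=1):
    """Optional stricter filter: keep only directly peered (or near) users."""
    return [r for r in requests if r.hops <= max_hops]
```

With this ordering, someone standing next to you who has just sent a request appears above a stale request from the far side of the network.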

Another approach that could help would be to have a mechanism to create closed communities, where a common passphrase or similar is used to tag network content as belonging to a particular community. There are also clever cryptographic mechanisms to achieve this effect that don’t allow a random unaccountable user to obtain and use the passphrase. Such approaches not only help to filter results to a manageable level in a large network, but also directly address some of the unaccountability that leads to what we might generally call anti-social behaviour on these platforms.
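As a minimal sketch of the simple shared-passphrase variant, the Python below derives a key from the community name and passphrase and uses an HMAC as the community tag. This is an illustrative assumption rather than Serval's mechanism: the function names, the use of the community name as salt and the iteration count are all choices made for this example.

```python
import hashlib
import hmac

def community_key(community_name: str, passphrase: str) -> bytes:
    """Derive a shared key; the community name acts as the salt (assumption)."""
    return hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), community_name.encode("utf-8"), 100_000
    )

def tag_content(key: bytes, content: bytes) -> str:
    """Compute a tag marking `content` as belonging to the community."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()

def belongs_to_community(key: bytes, content: bytes, tag: str) -> bool:
    """Check a tag in constant time; only passphrase holders can do this."""
    return hmac.compare_digest(tag_content(key, content), tag)
```

In this sketch anyone who learns the passphrase can both create and check tags, which is exactly the weakness that the cleverer cryptographic mechanisms mentioned above would need to address.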

Hybrid approaches are also possible, where, for example, only users in your verified social group are able to have image content displayed, so that the benefits of images can be used in a service or facility, but with reduced probability of this filling up with pornographic or other anti-social content. There is considerable potential solution space for such measures, well beyond the scope of this current project. But what is relevant to this project, and should be included, is at least some exemplar mechanism for discouraging anti-social behaviour, and where necessary, excluding anti-social users and their content. That is, like the existing Serval Chat (MeshMS) and Serval Micro-Blogging (MeshMB) services, it must follow a “safe place by default” approach.

2. Create several user-stories for how the search and discovery systems might be used in practice.

To create our user-stories, we will follow the Serval Project’s long focus on post-disaster response and recovery among the general public. We will thus imagine a situation where a community has had infrastructure damaged, and is without functioning communications infrastructure to reliably connect them to the outside world. Of course this scenario has strong parallels to what we see when people are living in regions of unrest as well, so it’s a good starting point for us. The following user-stories will walk us through an extended scenario in such a post-disaster situation. All names and locations are fictitious.

2.0 Scenario Background

The communities of Ulia, Luania, Saua and Kiatu live on Tija, a long and thin island of 800 square kilometres at the northern end of the archipelago, some 400km from the national capital of Ari Ula, and 90km from the next nearest populated island. The 3,500 people living on Tija enjoy life on their tropical island, located in the South Pacific. Kiatu, the regional capital, is the largest population centre on the island, with a population of just over 2,000 living in the town and surrounding villages. There is 4G cellular coverage in the town centre, with 2G coverage extending out to the surrounding villages. The cellular service, including internet access, is connected to the rest of the country via a VSAT satellite link. Luania is the nearest of the other communities to Kiatu, although it is still some 15km away. Depending on weather conditions, its residents can sometimes use mobile phones from the top of a nearby hill that has line of sight to Kiatu. Saua is 7km on the other side of Luania from Kiatu, and has no cellular network access. Ulia is yet another 12km further on from Saua, and also lacks cellular coverage. There are NGOs or small government presences in Saua and Ulia, who have HF radios that are used to provide a basic level of communications back to Kiatu and the national capital.

Tija’s location in the southern tropical zone of the Pacific exposes it to considerable geophysical hazards, including cyclone, tsunami and earthquake, like many other Pacific Island Countries. GDP per capita is relatively low, yet as has become common around the world, almost all families and most adults have access to a low-end smart-phone. The relative expense and difficulty accessing mobile internet means that the population is quite familiar with “side loading” of applications on their Android phones, as is the case in many Pacific Island Countries. The Tijans have a strong community structure based around traditional chiefdoms in each of the four communities, and work constructively with one another whenever disasters strike.

Because of their vulnerability, and isolation from conventional communications, a number of residents in each of the four communities on Tija already use the Serval Mesh peer-to-peer software on their Android smart-phones.

Our scenario begins with a 7.2 magnitude earthquake that causes substantial damage, especially in Kiatu, which is nearest to the epicentre. Fortunately the earthquake triggers only a relatively small tsunami, and there is no immediate loss of life. However, the combination of the earthquake and tsunami has destroyed the cellular infrastructure and its satellite earth-station. It will likely take the mobile network operator a couple of weeks to restore service, as they are also having to prioritise restoration of service to larger communities nearer the national capital.

2.1 Forming a community

With conventional communications to the outside world down, the Tijan communities need to find their own ways to communicate, so that they can coordinate their own response to the disaster, and generally keep their communities functioning as effectively as possible.

The larger community in Kiatu is particularly keen to enable local businesses to continue trading, and to ensure that the limited local resources can be readily used where required, e.g., building materials required to repair infrastructure, as well as the people required to undertake such work. They also have a need to identify where infrastructure is damaged, so that they have the situational awareness that will enable them to be effective in their response. Knowing where damaged infrastructure is will also be helpful for re-routing traffic and ensuring public safety.

The smaller out-lying communities are also keen to ensure that local communications is possible, so that they can ensure the well-being of their communities, and collate information about any damaged infrastructure so that this can be communicated to the regional and national capitals, so that remedial works can be carried out when the expected foreign aid support starts to flow into the country.

To support this, in each of the communities someone opens the Serval Mesh app, and uses the Create Community function to create a new local community on the mesh network. As well as a community name, a shared secret is set up that is required by participants who wish to contribute material into the community, but reflecting the friendly socio-political environment on Tija, the communities are set up to allow read and search access without needing the shared secret. Also in the smaller communities, the shared secret is set to something quite simple, such as 1234, as the communities prioritise ease of access over security.

In Kiatu, the larger population means that several different people have attempted to create the community, resulting in some initial confusion. Later in the day, the regional administration select one of those communities to be the official Kiatu earthquake response community on the Serval Mesh. Nonetheless, it takes a few days for almost all members of the duplicate communities to switch to the official community. These problems do not emerge in the smaller communities, as their populations are small enough that the normal village meeting mechanisms are able to lead a unified approach in each community.

At this point, there are four primary communities that have been created: Official Kiatu Earthquake Response, Ulia Earthquake, Luania Together, and Saua Saua. This is in addition to the short-lived duplicate communities in Kiatu: Kiatu Earthquake Help, Earthquake Help, and Kiatu Together. However, there are relatively few users in each. Initially, the duplicate Kiatu communities have more users than the official community, reflecting the ability of grass-roots organisation to be faster than government processes, especially following a disaster.

2.2 Building a community

After creating the communities, individuals within those communities approach others and ask if they have joined the new communities, and explain to them how the communities can help them, by allowing the collection and sharing of information of relevance to them. For those who do not yet have the Serval Mesh on their phone, they side-load the application using the bluetooth tools for doing so that they are already familiar with, or they use the wifi-based sharing mechanism in the Serval Mesh app.

This process continues until all community members who wish to join a community have joined.

2.3 Searching for communities

Some community members don’t wait until someone invites them, but use the Search for communities function in the Serval Mesh app to find communities. Most users use the Find communities that my contacts are in function to do this in a safe way, that avoids the risk of seeing offensively named communities. Some users choose to search through all available communities, after clicking on a confirmation that unprotected searching may result in them seeing offensive material. Either way, when they find the community that they are looking for, they use Join Community, and if they make an error, or wish to otherwise leave a community, they use the Leave community function.

As members of the communities have family and friends in the other villages on Tija, they use the Search for communities function to discover and join the other communities that have been set up on Tija. Among those is Neire, who lives in Ulia.

2.4 Searching for people: User discovery

Neire helps to look after her grandmother, Iu. Neire helps her grandmother set up the Serval Mesh on her phone, and helps her to join the Ulia Earthquake community. She then uses the find users function, with the search scope set to only look for people in my community, to find herself on Iu’s phone, and to find Iu on her phone. They confirm that they can send each other text messages and voice messages. Neire then helps Iu to find other relatives who have joined the community, and adds them all to Iu’s contact list, so that she can easily communicate with them.

2.5 Searching for services: Service & Data discovery

Neire is helping with the recovery effort. As she can drive, the village chief allows her to use the village’s truck to help move people and goods, as required. Neire uses the offer service function to advertise to each of the communities the availability of freight and transport services for the next three days. She offers this for free.

The truck has only a quarter of a tank of diesel, so Neire uses the service search feature to search for available sources of diesel fuel. She also uses the publish request feature to announce that she is looking for diesel fuel. Using this, after a short time, she receives a resource match alert that contains a list of current offers for diesel fuel that have been made in the communities that she is participating in. She thus discovers that there is some diesel available in Saua, and prepares to head to Saua. Before departing, she searches the list of published requests from the Saua community.

By this time, more people have had time to make requests, and she discovers that Saua is running short on fresh drinking water, because the earthquake has damaged some of their water tanks, and the tsunami has caused contamination of several other water tanks. Neire thus arranges with others from her village to cart 900L of drinking water in a variety of containers. One of the other community members uses the offer resources function to announce the availability of ample fresh drinking water in Ulia. Finally, Neire and several of her extended family set off to Saua with the water, and to collect the diesel.

2.6 Searching for data/information: Data discovery

Other members of the communities use the search published requests and search services functions, both to find resources that they need, as well as to understand what resources and services they might be able to provide to meet unmet needs.

Boli from Luania notices that there are unmet requests for diesel fuel, because the only offers for diesel fuel are from a few community members who have diesel in portable containers. Boli assumes that this means that the fuel distribution centre in Kiatu was damaged in the earthquake or tsunami. Boli and several others in Luania have coconut plantations, and have hand-presses for extracting the oil from coconuts, which can be used in adapted diesel engines, provided that the weather is warm enough that it doesn’t solidify. Fortunately, the tropical climate on Tija means that solidification is a very rare event. Boli thus uses the publish service and offer resources functions to offer diesel fuel and fueling services. Boli and the other coconut plantation managers arrange for the production of several hundred litres of oil over the next 24 hours, and keep an eye on demand through customers and through the search published requests function.

2.7 Searching for places: Mapping and geoinformatic discovery

Meanwhile, Neire and her team are making their way down towards Saua. Along the way, they discover several places where the road has been damaged due to rock slides or erosion from the tsunami. Neire has one of her team use the crowd-sourced mapping function to mark these locations on the map, so that others will know about the damage, allowing them to avoid it, or to begin work on repairing it. Some of the damage is quite near to Saua, and after Neire arrives, she shows some of the people in Saua how to use the crowd-sourced mapping to search for damaged infrastructure, and search for resource offers to find the materials that they need to start repairing the worst of the damage.

Neire uses the crowd-sourced mapping to navigate to the locations where people had requested fresh drinking water and helps distribute this. She is also able to efficiently plan her route to include collecting the diesel fuel. The recipients of the water use the cancel resource request function after they receive the drinking water. They have in the meantime logged their damaged water tanks on the crowd-sourced mapping function. After Neire has collected the diesel fuel, that offer is also removed, using the update resource offer function.

Neire now has enough diesel fuel to respond to several other requests for moving materials around Saua, including helping the road repair volunteers get their tools and materials to the nearest damaged location. She knows that she will still need more fuel, and has been keeping an eye on the resource match alert function, and discovers the offer of coconut oil for use as diesel substitute. She uses the Serval Mesh’s MeshMS text messaging function to make arrangements to collect 300 litres of coconut oil, enough to fuel her truck for the next couple of days. Because she is able to communicate with Boli, she knows when the coconut oil will be ready, and is able to help with other recovery activities beforehand. The crowd-sourced mapping function also lets her discover ahead of time the condition of the road, and allow sufficient time to get there, and to bring some repair materials and volunteers to stabilise the worst of the damage.

Neire arrives at Luania in the early evening, soon after Boli has her fuel ready. Neire uses the search for services function to find a place where she and her team can spend the night, before they continue helping their communities the next morning.

2.8 Searching by low-literacy user

Not everyone on Tija has a high level of literacy. Yeco is an older man in Luania who lives on his own when his family travel to Kiatu for work. This is the case following the earthquake. His family have not been able to return from Kiatu yet, as a bridge has been damaged by the earthquake, making the road impassable. However, Yeco is able to exchange voice messages with his family sporadically, as people walk from Luania and Kiatu to opposite sides of the damaged bridge, allowing messages to be automatically exchanged via the Serval Mesh. Thus Yeco and his family know that one another are safe. However, Yeco’s kitchen has been damaged, and so he will require assistance finding nutritious food. Based on instructions from his family, he opens the crowd-sourced mapping function, and uses emojis attached to the service offers and resource offers to find someone nearby who is offering food. He sends them a voice message asking if they can bring him some food. They ask him in a voice message to use the share location function, which he does, thus enabling them to deliver him food until his family are able to return from Kiatu.

2.9 Searching by visually impaired user

Ado is another elderly person living in Luania, and while she is literate, her eyesight has deteriorated over the years, making it very difficult for her to read. She is, however, able to use the vision impaired interface option to access basic functions of the Serval Mesh. This provides a text-to-speech interface, which allows her to access and send voice messages to her contacts. She also requires assistance with obtaining food while her family are busy with the recovery efforts. She is able to send them voice messages to let them know how she is going and set their minds at ease. She also uses the vision impaired interface to listen to the various resource offers, and thus finds someone nearby who is able to provide her with nutritious food, and communicates with them using voice messages.

2.10 Summary

The above scenario and its embedded user stories show how communications in these sorts of situations can be very effective at helping vulnerable communities. The combination of the existing Serval Mesh functions with the ability to share, discover and request services and resources makes it much easier for isolated communities to respond efficiently and with effective situational awareness in ways that have not previously been possible. Now our challenge is to collate the functions required to enable these improvements, and map them to the Serval Mesh’s delay-tolerant networking primitives.

3. Map these services to the Serval Mesh Rhizome Delay Tolerant Networking Primitives, and work through several user-stories to confirm that the model is fundamentally workable.

3.1 Mapping to Rhizome Primitives

Let’s start by summarising the functions that are referenced in the above user-stories. Then we can look at which ones are related, and how we can map these to Serval Mesh Rhizome Delay-Tolerant Networking primitives, excluding those already in the Serval Mesh, such as find user, or Send MeshMS text message. These collect into several fairly obvious groupings:

Community Management:

Create Community

Search for communities

Find communities that my contacts are in

Join Community

Leave Community

Services & Resources:

Offer Service

Offer Resource

Search for Service [Offer]

Search for Resource [Offer]

Publish request for service

Publish request for resource

Cancel request for service

Cancel request for resource

Update request for service

Update request for resource

Update offer of service

Update offer of resource

Search service requests

Search resource requests

Alert on Resource Match

Crowd-Sourced Mapping:

Share to Crowd-Sourced Map

Show Crowd-Sourced Map

Transcending these is the need to support accessible content, such as emojis for searching visually, and text-to-speech and emoji-to-speech (including in multiple languages). The mapping functions can be supported by including a geographic tag, which might represent a point, a locality or a geographic bounding box, depending on the circumstances. For simplicity, it probably makes sense to use Google’s Plus Codes as a succinct way to describe a location or locality.

At a lower level, it looks like we can fairly easily map these to Rhizome primitives. For each user, we can have a bundle that contains the current list of services and resources that they are offering and seeking. Each of these can be associated with a Plus Code, that locates the request in space.
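One possible shape for such a per-user bundle payload is sketched below in Python. The class names, field names and the JSON encoding are all assumptions for illustration; the actual payload format has not been decided in this post. The `plus_code` value is treated as an opaque string here.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Entry:
    kind: str       # "offer" or "request"
    category: str   # "service" or "resource"
    text: str       # human-readable description, may include emojis
    plus_code: str  # Plus Code locating the entry in space (opaque string)

@dataclass
class DiscoveryBundle:
    author_sid: str                       # the publishing user's ServalID
    entries: list = field(default_factory=list)

    def to_payload(self) -> bytes:
        """Serialise to bytes suitable for use as a bundle payload."""
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")

    @staticmethod
    def from_payload(payload: bytes) -> "DiscoveryBundle":
        """Rebuild the bundle from a payload produced by to_payload()."""
        raw = json.loads(payload.decode("utf-8"))
        return DiscoveryBundle(raw["author_sid"], [Entry(**e) for e in raw["entries"]])
```

For instance, Neire's bundle from the scenario would hold one service offer (freight and transport) and one resource request (diesel fuel), each with its own Plus Code.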

One of the challenges that we will need to face is the cluttering of the system, especially when people make offers or requests, and either forget to remove them, or perhaps move out of the community. In these cases, we don’t want these stale offers and requests to hang around, making the system difficult to use. To solve this, in addition to the Plus Code to locate the request in space, a time marker can also be added that indicates when the request or offer is valid, with fairly tight maximum bounds. The complete bundle should include a claimed time of publishing, and the time markers should be relative to this, and limited to a maximum of, say, 3 days from that time of publishing. This is designed to be long enough for requests and offers to spread through the network, including in fairly difficult situations, but not so long that they can clog up the system and cause more trouble than help. Based on experience of working with humanitarian response organisations, 3 days is a very long time in a disaster.

Then we also have the crowd-sourced mapping elements, that are separate from the resource and service requests and offers. These can, however, be included in the same bundle, and simply encode points of interest. Again, a similar expiry system should apply, but probably with a longer expiry, perhaps allowing up to 14 days. After 2 weeks, the information is likely to be quite out of date. But the user can of course choose to re-publish the information.
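The expiry rules can be sketched as follows, using the 3 day and 14 day limits suggested above. The entry kinds, the use of offsets in seconds and the function names are assumptions for this example. An entry that claims a longer validity than allowed is simply clamped to the maximum for its kind.

```python
DAY = 24 * 3600

# Maximum validity, measured from the bundle's claimed time of publishing.
MAX_VALIDITY_SECONDS = {
    "offer": 3 * DAY,
    "request": 3 * DAY,
    "map_point": 14 * DAY,
}

def validity_window(published_at, kind, start_offset, end_offset):
    """Return absolute (start, end) times, clamped to the limit for `kind`."""
    limit = MAX_VALIDITY_SECONDS[kind]
    start = published_at + max(0, start_offset)
    end = published_at + min(end_offset, limit)
    return start, end

def is_current(published_at, kind, start_offset, end_offset, now):
    """True if the entry is valid at time `now`."""
    start, end = validity_window(published_at, kind, start_offset, end_offset)
    return start <= now < end
```

Re-publishing a bundle resets its claimed time of publishing, which is how a user would extend a map point beyond two weeks.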

This approach requires only one bundle per user, which will help the system be more efficient. This bundle should use a unique service field in the Rhizome Manifest that contains the bundle meta-data. The various functions then simply either modify a user’s own bundle, and re-publish it into Rhizome, or search the bundles in Rhizome that have this service field. No changes are required to the low-level functionality of the Serval Mesh.

The existing prioritisation scheme that prioritises small over large bundles will prioritise those bundles that contain only a single request or offer, over those that contain many offers. This is probably reasonable, and is certainly easy to explain to users. The software could guide the user to understand that if they want a “high priority” request, then they need to make only that request, and not others, and not also make offers. While imperfect, it is a reasonable approach where priority and user focus are interconnected in a manner that reflects real-life.

One challenge that has to be addressed is to ensure that each user can have only one such bundle. One approach to this is to have the Bundle ID (BID) of the service discovery bundle be cryptographically tied to the user’s ServalID (SID). This can be done in a hard 1:1 way; however, only one such bundle can then exist per user for all purposes, and that bundle is already used for sharing identity information on the mesh. Thus we need to use a looser coupling. This can be done by requiring the user to sign the BID of the service discovery bundle to attest its authenticity. If multiple bundles for the same user are found, the search mechanism (and Rhizome storage system) can discard those bundles that are not currently valid in time (which the expiration scheme neatly enables), and if multiple bundles are valid simultaneously, then the bundle with the latest publication time should be used in preference to any older ones. In this way, only the most recent bundle will be valid for the search function.
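The selection rule can be sketched in a few lines of Python. This is only an illustration: `BundleInfo` and `current_bundle` are hypothetical names, and the signature check over the BID is assumed to have been done elsewhere and is represented here by a simple boolean.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class BundleInfo:
    bid: str            # Bundle ID of the service discovery bundle
    sid: str            # ServalID of the claimed owner
    published_at: int   # claimed publication time (Unix seconds)
    expires_at: int     # end of validity (Unix seconds)
    signature_ok: bool  # assumption: result of verifying the owner's signature over the BID


def current_bundle(candidates: Iterable[BundleInfo], sid: str,
                   now: int) -> Optional[BundleInfo]:
    """Pick the single bundle that search should use for `sid`, or None."""
    # Keep only properly attested bundles for this user that are valid right now.
    valid = [b for b in candidates
             if b.sid == sid and b.signature_ok
             and b.published_at <= now <= b.expires_at]
    # Among those, the most recently published bundle wins.
    return max(valid, key=lambda b: b.published_at, default=None)
```

Expired, not-yet-valid and unsigned bundles are filtered out first, so at most one bundle per user ever reaches the search function.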

This leaves only the community management functions. The communities could be managed in separate bundles, however, we can handle this in the same bundle, by allowing the inclusion of a number of communities that a user claims to be a member of. Each community name would be followed by a cryptographic hash that is the hash of the user’s SID, the community name, and the shared secret that attests membership to the community. The search functions then need only to filter the search results according to whether the service discovery bundle has any communities in common with the searcher.
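To make the membership attestation concrete, here is a minimal Python sketch. The choice of SHA-256, the length-prefixed field encoding and all of the function names are assumptions made for illustration; the text above only specifies that the hash covers the user's SID, the community name and the shared secret.

```python
import hashlib
import hmac
from typing import Dict


def membership_hash(sid: bytes, community: str, shared_secret: bytes) -> bytes:
    """Hash of SID + community name + shared secret (SHA-256 is an assumption)."""
    h = hashlib.sha256()
    for part in (sid, community.encode("utf-8"), shared_secret):
        # Length-prefix each field so that field boundaries are unambiguous.
        h.update(len(part).to_bytes(4, "big"))
        h.update(part)
    return h.digest()


def attests_membership(sid: bytes, community: str, claimed: bytes,
                       shared_secret: bytes) -> bool:
    """Check a claimed hash against the secret that the searcher holds."""
    expected = membership_hash(sid, community, shared_secret)
    return hmac.compare_digest(expected, claimed)


def shares_community(bundle_sid: bytes, bundle_claims: Dict[str, bytes],
                     my_secrets: Dict[str, bytes]) -> bool:
    """True if the bundle proves membership of any community the searcher is in."""
    return any(
        name in my_secrets
        and attests_membership(bundle_sid, name, claimed, my_secrets[name])
        for name, claimed in bundle_claims.items()
    )
```

Because the SID is part of the hash, a claim copied from another user's bundle will not verify, and only someone who holds the shared secret can check or produce a valid claim.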

This information can also be used to filter search results that are outside of the service discovery bundle. For example, to allow searching through all microblogging messages posted by members of a given community, or other content that they might have uploaded into Rhizome.

Together, this all means we need only one Rhizome bundle per user to support distributed search and service discovery. Circling back to our need to enable safe search spaces, this is also extended in a pleasingly simple way: You can search your contacts, or extend that to searching in one or more communities in which you participate, or you can search without filters. This allows a graduated transition from very safe to unsafe searching.

From an individual security perspective, it is acknowledged that this approach discloses to other users which communities a user is in. This could be partially concealed, by not including the community names in the service discovery bundle, but rather only the hash that can be interrogated to verify if a user is a member of a given community. However, this would only provide limited protection, because if anyone knew the name of a community, they would be able to test if a user was a member of that community. It would also require a separate community advertisement mechanism, if communities are to be able to be found. Those advertisements would then effectively provide the missing information required to determine the communities in which a user resides.

For more sensitive environments, it would be possible to make “silent communities”, where the community name is neither advertised nor included in the bundles. To provide security for communities, it would then be necessary to encrypt the payload of the service discovery bundle using a key derived from the shared secret of the community. This could be done by having the community membership attestation section of the bundle also include a decryption key that is encrypted using a key derived from the shared secret of the community. This would prevent people outside of a community from being able to search the service and resource offers and requests of the community, which is probably a good step to take.

This approach also has the advantage that members of a community can choose to change the shared secret at any point, without requiring any central coordination. They could choose to advertise membership with both the new and old shared secret for a period of time, to allow others time to update their shared secrets. Alternatively, when the goal is to exclude an anti-social member, the old shared secret could be immediately discarded, and any updates to the service discovery bundles would render them unreadable by the excluded user (although they could still read any older version of the bundles for which they have access to the shared secret). This effectively provides a kind of forward secrecy for the communities. It also helps to expire stale members of the communities, who are not participating, helping with the data trimming aspects of the system.

Finally, we need to address the optimisation of bundles in the network, so that content for a community is prioritised over content from outside the communities that a device owner has joined. This will require tweaking of the Rhizome Bundle synchronisation process, rather than any changes to the data structures used. It thus falls outside the scope of this project, although it is highly desirable to have this implemented, as it will help with the scalability of Serval Mesh networks greatly.

So let’s now draw up a rough outline of what this service discovery bundle would contain, so that we can follow its contents for the example users in our previous scenario.

The key information types are:

1. Publication time and date of the bundle. This is used to indicate the beginning of validity of the contents of a bundle, so that a malicious user can't publish data that is "eternally valid", and thus clutters up search results forever.

2. A record for each community that a user has joined, and in which their service discovery bundle should be considered valid. These may have individual periods of validity, but always within the hard-limit of 7 days from the publication date of the bundle. Thus to remain in a community, the service discovery bundle has to be updated at least once per week. Again, this is to stop stale data clogging the network and search results. The maximum number of communities that can be listed in a bundle should be restricted to a relatively small number, perhaps 5.

3. A record for each resource request, service request, resource offer or service offer, again with each entry having a validity period. These records should include a textual description of the request or offer, and should also allow a number of emojis to be specified, to provide a language-independent description that can support both low-literacy users and, through the use of an "emoji reader", vision-impaired users. For low-literacy users, it should be possible to specify a request or offer that consists solely of the describing emojis. The emojis can also be used to provide a convenient visual description of a record for placement on a crowd-sourced map display. To support this, these records should also include a Plus Code location identifier, making it easy to draw maps containing this information.

4. Records for the crowd-sourced mapping interface, that are not service or resource requests or offers, but rather situational-awareness markers, e.g., to indicate damaged roads or other infrastructure. These are otherwise similar to the request and offer records.

A data model with just these few record types is sufficient to support the various use-cases explored in the scenario in section 2. These will be fleshed out in a later milestone, once we have assured ourselves that they really are sufficient.
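To give a feel for how small this data model is, the four information types above can be sketched as Python dataclasses. Everything here is illustrative: the class and field names are hypothetical, the on-the-wire encoding inside the Rhizome bundle is not addressed, and the limits are simply the figures suggested in the text (5 communities, 7 days for community records, 3 days for requests and offers, 14 days for map markers).

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List

DAY_S = 24 * 3600
MAX_COMMUNITIES = 5
MAX_COMMUNITY_VALIDITY_S = 7 * DAY_S
MAX_OFFER_VALIDITY_S = 3 * DAY_S
MAX_MARKER_VALIDITY_S = 14 * DAY_S


class RecordKind(Enum):
    RESOURCE_REQUEST = "resource_request"
    SERVICE_REQUEST = "service_request"
    RESOURCE_OFFER = "resource_offer"
    SERVICE_OFFER = "service_offer"
    MAP_MARKER = "map_marker"  # situational-awareness marker, e.g. a damaged road


@dataclass
class CommunityRecord:
    name: str
    membership_hash: bytes  # hash over SID, community name and shared secret
    valid_for_s: int        # validity, relative to the bundle's publication time


@dataclass
class EntryRecord:
    kind: RecordKind
    text: str               # may be empty for emoji-only entries
    emojis: List[str]
    plus_code: str          # location of the request, offer or marker
    valid_for_s: int        # validity, relative to the bundle's publication time


@dataclass
class ServiceDiscoveryBundle:
    published_at: int       # claimed publication time (Unix seconds)
    communities: List[CommunityRecord] = field(default_factory=list)
    entries: List[EntryRecord] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return a list of rule violations; an empty list means none were found."""
        found = []
        if len(self.communities) > MAX_COMMUNITIES:
            found.append("too many communities")
        for c in self.communities:
            if not 0 < c.valid_for_s <= MAX_COMMUNITY_VALIDITY_S:
                found.append(f"community {c.name!r}: validity outside limit")
        for i, e in enumerate(self.entries):
            if e.kind is RecordKind.MAP_MARKER:
                limit = MAX_MARKER_VALIDITY_S
            else:
                limit = MAX_OFFER_VALIDITY_S
            if not 0 < e.valid_for_s <= limit:
                found.append(f"entry {i}: validity outside limit")
            if not e.text and not e.emojis:
                found.append(f"entry {i}: needs text or at least one emoji")
        return found
```

A receiving device could run a check like `problems()` before indexing a bundle for search, so that bundles which break the expiry or community limits never clutter the results.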

3.2 User Story Work-Throughs

To verify that the data model is adequate, I went through the scenario from section 2, and mapped out the information that would be required in the service discovery bundle for the key users in the scenario. To keep this manageable, I have focussed only on the service discovery bundle, and excluded any Serval MeshMS or other communications. I have also left out the expiration and validity duration information, as this is logically fairly straight-forward, and is orthogonal to the search and discovery requirements.

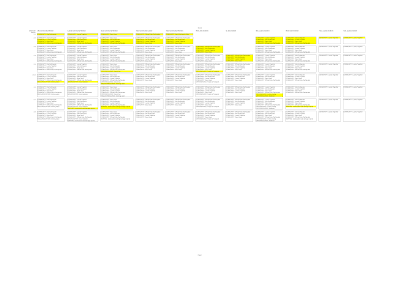

The result is the following image, where we have the key contents of the service discovery bundle listed for each of the sub-sections of the scenario. New pieces of data that are added to a user's service discovery bundle are highlighted in yellow when they are first added. The image is pretty big, so it is shown smaller here. Click on it to open it in full-resolution:

Using this diagram, we can trace through each user's activity. For example, we can trace Niere connecting to the network in 2.3, and then offering freight services in 2.5. Where she then seeks diesel fuel, we can see the offers of fuel from a Saua community member in 2.5, and then later from Boli in 2.6.

This approach to representing the data purposely doesn't show the act of searching for information, as that doesn't result in the further injection of data into the network. Rather, we can verify that the search will succeed, by visually confirming that the data required for the search is available at that point in time. For example, we can confirm that Boli's offer for fuel is published in his service discovery bundle at or before the time that Niere searches for fuel in 2.6. That is, we have taken the approach of showing the data that resides in the Serval Mesh network to facilitate the searches, and we can see that the required data is indeed there. Implementing the search facilities will come in a later milestone.

That all looks sensible, so now we just need to document this on the Serval Project Developer's website, which I have just done. That completes all the items from this first milestone:

1. Define Search and Discovery Services and Data Model

This sub-task focuses on defining the user-facing search and discovery services that will be created, and how they will be mapped to the underlying Serval Mesh network primitives.

Milestone(s)

Identify the precise search and discovery services to be provided, including from both data provider and consumer perspectives.

Create several user-stories for how the search and discovery systems might be used in practice.

Map these services to the Serval Mesh Rhizome Delay Tolerant Networking Primitives, and work through several user-stories to confirm that the model is fundamentally workable.

This milestone will be considered complete when the above elements have been completed, culminating in worked-through user-stories and a documented data model, both of which shall be documented on the Serval Project Developer’s website (which will be upgraded to HTTPS in the process), as well as on the Serval Project blog (https://servalproject.org).